LLM Observability

The most robust observability solution for large language models (LLMs) in production. Evaluate LLM responses, pinpoint where to improve with prompt engineering, and identify fine-tuning opportunities using vector similarity search.

Detect Problematic Prompts & Responses

LLMs can hallucinate, provide incorrect information, give suboptimal responses, or suffer from poor retrieval, among many other things that can go wrong.

Monitor the performance of your model’s prompt and response embeddings. Using LLM evaluation scores and clustering analysis, Arize helps you zero in on the areas where your LLM needs improvement.

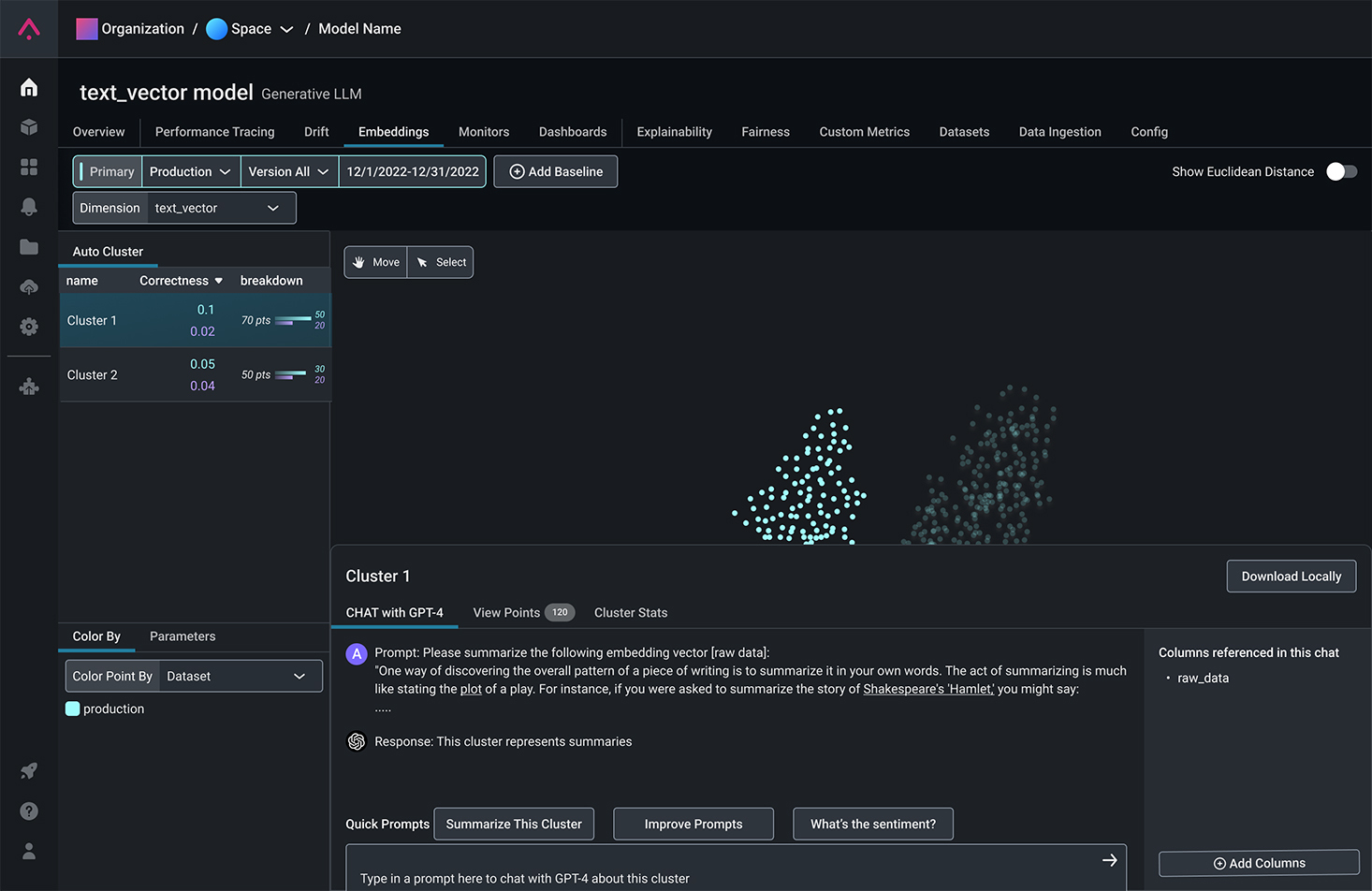

Analyze Clusters Using LLM Evaluation Metrics & GPT-4

Automatically generate clusters of semantically similar data points and sort them by performance. Arize supports LLM-assisted evaluation metrics and task-specific metrics, as well as user feedback.
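To make "cluster, then sort by performance" concrete, here is a minimal sketch in plain Python. It is not Arize's implementation: it groups embedding vectors with k-means (a stand-in chosen for illustration, since the clustering algorithm Arize uses is not stated here) and then ranks clusters by mean evaluation score, lowest first. The helper names `kmeans` and `rank_clusters`, the farthest-first initialization, and the toy two-dimensional embeddings and scores are all hypothetical.

```python
from statistics import mean


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(vectors, k, iterations=20):
    """Hypothetical helper: cluster embedding vectors with plain k-means.

    Uses deterministic farthest-first initialization so results are
    reproducible. Returns one cluster label per input vector.
    """
    # Farthest-first init: start from the first vector, then repeatedly pick
    # the vector farthest from all centroids chosen so far.
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        farthest = max(vectors, key=lambda v: min(squared_distance(v, c) for c in centroids))
        centroids.append(list(farthest))

    labels = [0] * len(vectors)
    for _ in range(iterations):
        # Assignment step: attach each vector to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in vectors
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, label in zip(vectors, new_labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
    return labels


def rank_clusters(labels, scores):
    """Group evaluation scores by cluster and sort clusters worst-first.

    Returns (cluster_label, mean_score, size) tuples, lowest mean score first.
    """
    by_cluster = {}
    for label, score in zip(labels, scores):
        by_cluster.setdefault(label, []).append(score)
    summary = [(label, mean(s), len(s)) for label, s in by_cluster.items()]
    return sorted(summary, key=lambda row: row[1])


# Toy data: 2-D stand-ins for real prompt/response embeddings, each paired
# with a hypothetical evaluation score in [0, 1].
embeddings = [[0.0, 0.1], [0.1, 0.0], [5.0, 5.1], [5.1, 4.9]]
eval_scores = [0.9, 0.8, 0.2, 0.3]

labels = kmeans(embeddings, k=2)
for label, avg, size in rank_clusters(labels, eval_scores):
    print(f"cluster {label}: mean score {avg:.2f} over {size} points")
```

Sorting worst-first means the cluster containing the two low-scoring points (0.2 and 0.3) is listed first, which is the cluster you would inspect before any other.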

An integration with ChatGPT enables you to analyze your clusters for deeper insights.

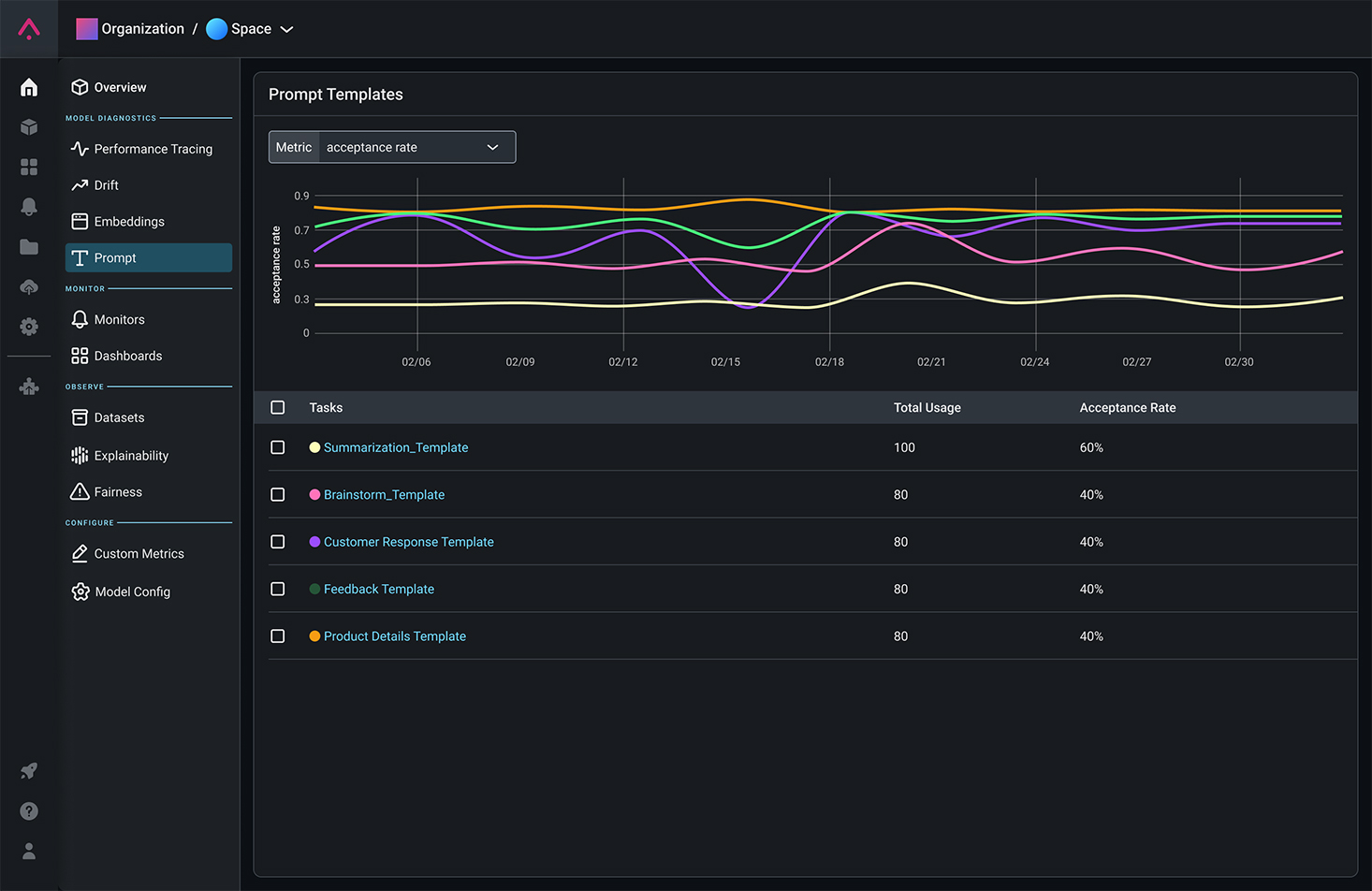

Improve Your LLM Responses with Prompt Engineering

Pinpoint prompt/response clusters with low evaluation scores.

Generative workflows suggest ways to augment prompts to help your LLM generate better responses.

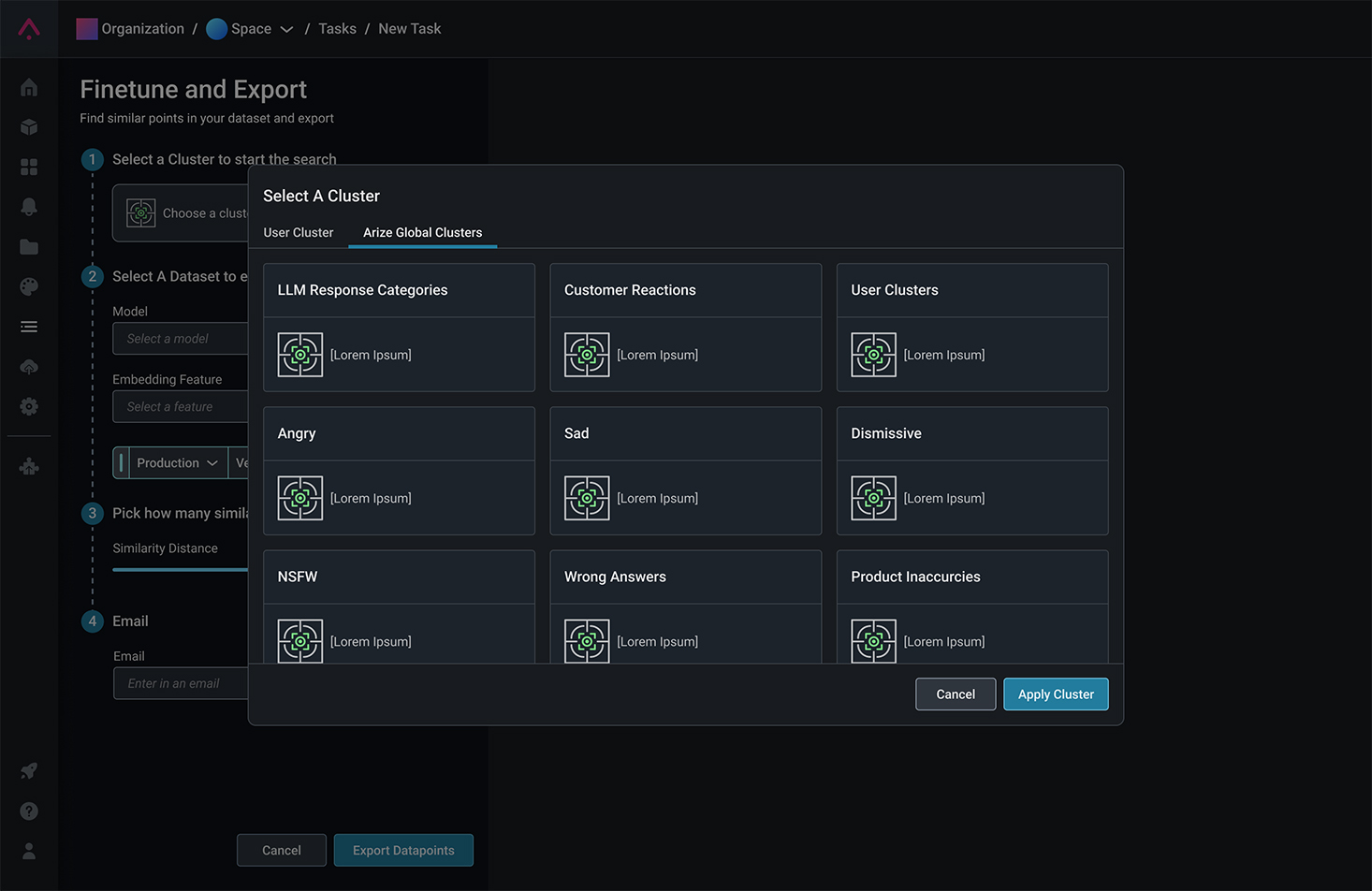

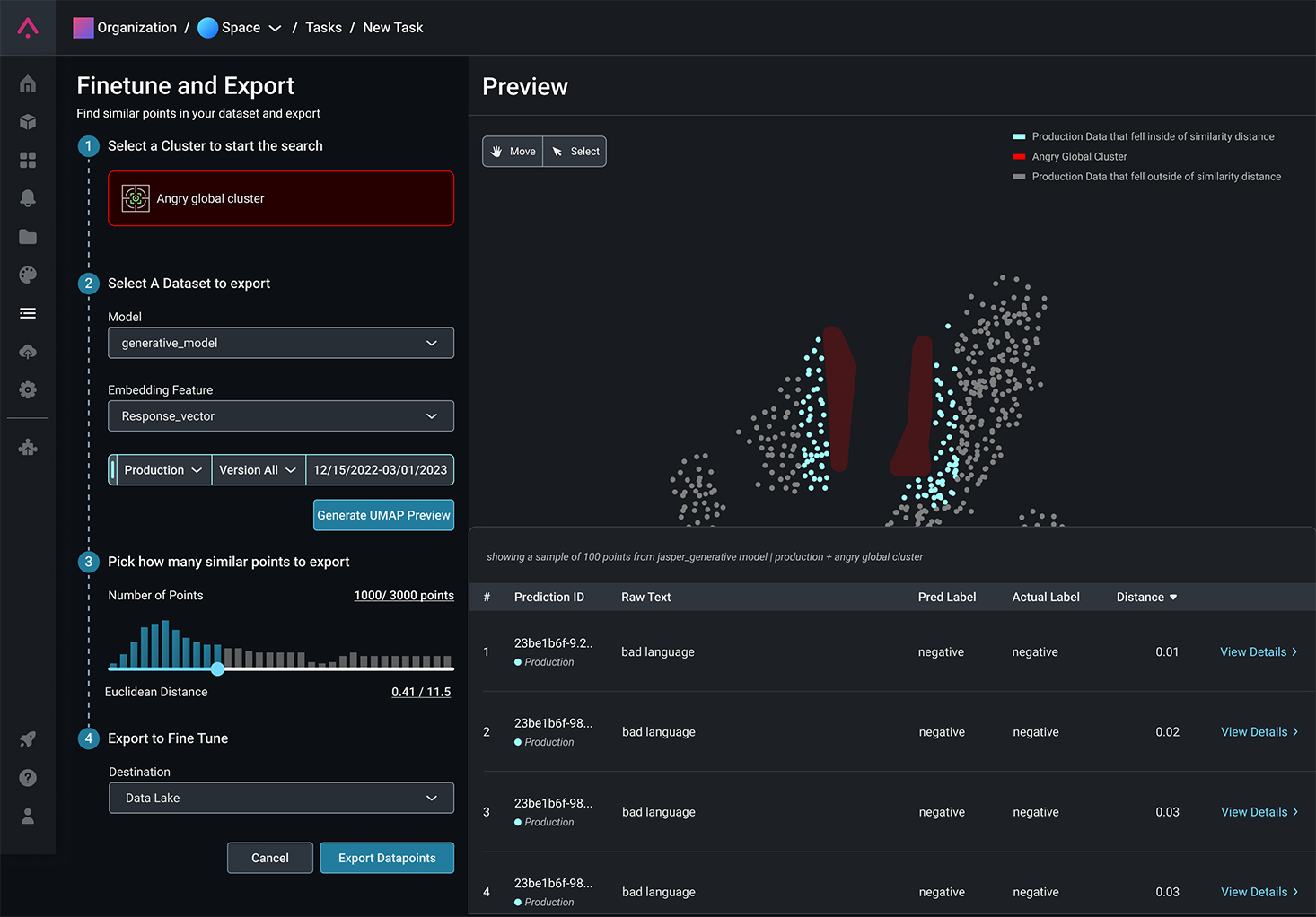

Fine-Tune Your LLM Using Vector Similarity Search

Find problematic clusters, such as clusters of inaccurate or unhelpful responses, and fine-tune your model with better data.

Vector similarity search surfaces other examples of emerging issues, so you can begin data augmentation before they become systemic.
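The idea behind vector similarity search can be illustrated with a brute-force cosine-similarity lookup. The sketch below is a hypothetical helper, not Arize's API: given the embedding of one known-bad response, it returns the indices of the most similar embeddings in a pool, which become candidates for review and data augmentation. The `find_similar` name, its `k` and `min_similarity` parameters, and the toy vectors are assumptions; a production system would typically use an approximate nearest-neighbor index instead of scanning every vector.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_similar(query, pool, k=3, min_similarity=0.8):
    """Hypothetical helper: exact (brute-force) top-k similarity search.

    Returns up to k (similarity, index) pairs from `pool`, most similar first,
    keeping only matches at or above `min_similarity`.
    """
    scored = [(cosine_similarity(query, emb), idx) for idx, emb in enumerate(pool)]
    matches = [pair for pair in scored if pair[0] >= min_similarity]
    return heapq.nlargest(k, matches)


# Toy embeddings: the query stands in for a response flagged as inaccurate.
bad_response = [1.0, 0.0]
pool = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]

for similarity, idx in find_similar(bad_response, pool):
    print(f"pool[{idx}] similarity={similarity:.3f}")
```

Raising `min_similarity` returns fewer, closer matches; lowering it casts a wider net for related issues at the cost of more false positives to review.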

Pre-Built Clusters for Prescriptive Analysis

Use pre-built global clusters identified by Arize’s algorithms, or define custom clusters of your own, to simplify root cause analysis (RCA) and make prescriptive improvements to your generative models.